There is an issue: we cannot use the same RootCA certificate for both the Gateway and the Developer Portal, because one RootCA can cater to only one backend. We must therefore change the RootCA certificate for the Developer Portal before we can expose it. We will keep the old RootCA certificate for the Gateway and create a new RootCA and SSL certificate for the Developer Portal. However, we must not change the FQDN, devportal.demo.com, because the same name is used in the Private DNS Zone.

Create a new RootCA and SSL certificate:

- Let’s follow the same process I used in the first part of the blog.

```text
C:\Work\study\APIM\demo\portal>openssl req -x509 -newkey rsa:4096 -keyout portal.key -out portal.crt -days 365 -nodes
...+.+..+...+....+.....+...+....+++++++++++++++++++++++++++++++++++++++++++++*.+......+............+........+...+....+++++++++++++++++++++++++++++++++++++++++++++*..+....+...+...+...+.....+.........+...........................+....+..+..........+.........+.....+...+.+.....+.........+...+..........+........+......................+.....+++++
-----
You are about to be asked to enter information that will be incorporated
into your certificate request.
What you are about to enter is what is called a Distinguished Name or a DN.
There are quite a few fields but you can leave some blank
For some fields there will be a default value,
If you enter '.', the field will be left blank.
-----
Country Name (2 letter code) [AU]:NZ
State or Province Name (full name) [Some-State]:Wellington
Locality Name (eg, city) []:Wellington
Organization Name (eg, company) [Internet Widgits Pty Ltd]:student
Organizational Unit Name (eg, section) []:student
Common Name (e.g. server FQDN or YOUR name) []:fabrikamportal.com
Email Address []:

C:\Work\study\APIM\demo\portal>openssl pkcs12 -export -in portal.crt -inkey portal.key -out portal.pfx
Enter Export Password:
Verifying - Enter Export Password:

C:\Work\study\APIM\demo\portal>openssl req -newkey rsa:4096 -out demo.csr -keyout demo.key -nodes
...+....+..+.............+..+..........+...+.....+.+.....+.+........+.+............+....................+...+++++++++++++++++++++++++++++++++++++++++++++*...+....+.....+.+..+...+....+...+...+...+..+...+......+...+.+..+.......+......+..+.......+.....+...+..........++++++++++++++++++++
-----
You are about to be asked to enter information that will be incorporated
into your certificate request.
What you are about to enter is what is called a Distinguished Name or a DN.
There are quite a few fields but you can leave some blank
For some fields there will be a default value,
If you enter '.', the field will be left blank.
-----
Country Name (2 letter code) [AU]:NZ
State or Province Name (full name) [Some-State]:Wellington
Locality Name (eg, city) []:Wellington
Organization Name (eg, company) [Internet Widgits Pty Ltd]:student
Organizational Unit Name (eg, section) []:student
Common Name (e.g. server FQDN or YOUR name) []:devportal.demo.com
Email Address []:

Please enter the following 'extra' attributes
to be sent with your certificate request
A challenge password []:password
An optional company name []:

C:\Work\study\APIM\demo\portal>openssl x509 -req -in demo.csr -CA portal.crt -CAkey portal.key -CAcreateserial -out demo.crt -days 365
Certificate request self-signature ok
subject=C = NZ, ST = Wellington, L = Wellington, O = student, OU = student, CN = devportal.demo.com

C:\Work\study\APIM\demo\portal>openssl pkcs12 -export -in demo.crt -inkey demo.key -out demo.pfx
Enter Export Password:
Verifying - Enter Export Password:

C:\Work\study\APIM\demo\portal>
```

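Commands:

```shell
# 1. New self-signed RootCA for the Developer Portal (portal.key / portal.crt)
openssl req -x509 -newkey rsa:4096 -keyout portal.key -out portal.crt -days 365 -nodes
# 2. Package the RootCA certificate and key as a PFX
openssl pkcs12 -export -in portal.crt -inkey portal.key -out portal.pfx
# 3. Private key and CSR for the SSL certificate (CN = devportal.demo.com)
openssl req -newkey rsa:4096 -out demo.csr -keyout demo.key -nodes
# 4. Sign the CSR with the new RootCA to produce demo.crt
openssl x509 -req -in demo.csr -CA portal.crt -CAkey portal.key -CAcreateserial -out demo.crt -days 365
# 5. Package the SSL certificate and key as demo.pfx
openssl pkcs12 -export -in demo.crt -inkey demo.key -out demo.pfx
```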
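If you prefer not to answer the prompts interactively, the same five steps can be scripted. The sketch below assumes a POSIX shell (for example bash on Linux, macOS or WSL) with OpenSSL on the PATH. It passes the Distinguished Name values from the transcript with `-subj` and the export password with `-passout`; `PFX_PASSWORD` and its default value are placeholders you should replace. It finishes with `openssl verify` to confirm that demo.crt chains to the new RootCA.

```shell
# Non-interactive sketch of the five OpenSSL steps above.
# Assumptions: OpenSSL is on PATH; PFX_PASSWORD is a placeholder export password.
set -eu

PFX_PASSWORD="${PFX_PASSWORD:-ChangeMe123}"   # placeholder, replace before real use
DN="/C=NZ/ST=Wellington/L=Wellington/O=student/OU=student"

# 1. Self-signed RootCA for the Developer Portal
openssl req -x509 -newkey rsa:4096 -keyout portal.key -out portal.crt \
  -days 365 -nodes -subj "$DN/CN=fabrikamportal.com"

# 2. RootCA certificate and key packaged as a PFX
openssl pkcs12 -export -in portal.crt -inkey portal.key -out portal.pfx \
  -passout "pass:$PFX_PASSWORD"

# 3. Key and CSR for the portal host name (must stay devportal.demo.com)
openssl req -newkey rsa:4096 -out demo.csr -keyout demo.key -nodes \
  -subj "$DN/CN=devportal.demo.com"

# 4. Sign the CSR with the new RootCA (creates portal.srl for serial numbers)
openssl x509 -req -in demo.csr -CA portal.crt -CAkey portal.key \
  -CAcreateserial -out demo.crt -days 365

# 5. SSL certificate and key packaged as demo.pfx
openssl pkcs12 -export -in demo.crt -inkey demo.key -out demo.pfx \
  -passout "pass:$PFX_PASSWORD"

# Sanity checks: print subject/issuer, then verify the chain against the new RootCA
openssl x509 -in demo.crt -noout -subject -issuer
openssl verify -CAfile portal.crt demo.crt
```

The `openssl verify` line should report `demo.crt: OK`; if it does not, the SSL certificate was not signed by the RootCA you are about to upload to the Application Gateway.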

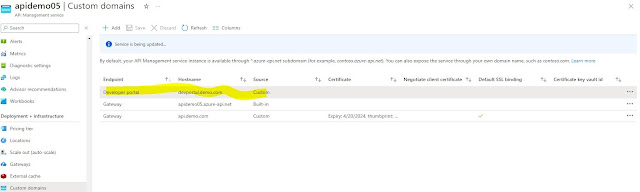

Update the new SSL certificate (demo.pfx) for the custom domain in the API Management instance.

- Follow the same process as in the first part.

- Click the Save button. The update takes some time; once it is done, we will test the Developer Portal from the VM.

- Once the certificate is saved, let’s try to access the Developer Portal from the virtual machine.

Everything looks good from the VM: we can access the Developer Portal after changing the certificate.

Open the Application Gateway and add a new listener for the Developer Portal.

Create a new listener for the portal with the details below. I am using port 80 to keep things simple.

- Click Save.

- Update the backend pool with the details below, and make sure its target is devportal.demo.com, the FQDN we configured in the Private DNS Zone.

Add Backend Setting:

Add a backend setting with the details below.

- Change the certificate extension from .crt to .cer and upload it. This must be the new RootCA certificate (portal.crt) that we just created for the Developer Portal.
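Renaming works here because `openssl req -x509` wrote portal.crt in Base-64 (PEM) form, which is the encoding this upload step expects, assuming the rename approach above is accepted by your gateway. A slightly safer variant is sketched below as a hypothetical helper, `to_cer`: it first lets OpenSSL parse the file, so a wrong or corrupt file is caught before upload, and then writes a PEM copy with the .cer extension.

```shell
# Hypothetical helper: validate a PEM certificate and write a .cer copy
# for upload as the Application Gateway trusted root certificate.
to_cer() {
  src="$1"
  dst="${src%.*}.cer"   # e.g. portal.crt -> portal.cer
  # Exits non-zero if the file is not a parseable PEM X.509 certificate
  openssl x509 -in "$src" -noout || return 1
  # Re-emit the certificate in PEM (Base-64) form under the .cer name
  openssl x509 -in "$src" -outform PEM -out "$dst" || return 1
  echo "$dst"
}

# Usage: to_cer portal.crt   (prints the name of the file to upload)
```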

Your setting should look similar to the one below.

Add Rule:

- Add a new rule for the Developer Portal.

- Select PortalListner.

- Update the Backend target and Backend settings.

- Click Save.
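The portal steps for the listener, backend pool, backend setting and rule can also be expressed with the Azure CLI. The sketch below is illustrative only: it assumes the Application Gateway v2 from part one, an existing frontend port on 80, HTTPS on port 443 to the portal backend, and portal.cer from the rename step. Apart from PortalListner and devportal.demo.com, every resource name (resource group, gateway, frontend IP, port, pool, setting, rule, root certificate) is a hypothetical placeholder, and the rule priority is an arbitrary example value. Commands are only printed unless `APPLY=1` is set; check each command against `--help` for your CLI version before applying.

```shell
# Sketch only: Azure CLI equivalent of the listener/pool/setting/rule steps.
# Hypothetical placeholders: RG, AGW, FRONTEND_IP, FRONTEND_PORT and the portal* names.
RG="${RG:-my-resource-group}"
AGW="${AGW:-my-app-gateway}"
FRONTEND_IP="${FRONTEND_IP:-appGwPublicFrontendIp}"
FRONTEND_PORT="${FRONTEND_PORT:-port_80}"   # existing frontend port on 80

# Print the command by default; execute it only when APPLY=1
run() {
  if [ "${APPLY:-0}" = "1" ]; then "$@"; else echo "$*"; fi
}

create_portal_routing() {
  # Trusted root: the Developer Portal RootCA saved as .cer
  run az network application-gateway root-cert create \
    --resource-group "$RG" --gateway-name "$AGW" \
    --name portalRootCert --cert-file portal.cer
  # Listener on the public frontend IP and the port-80 frontend port
  run az network application-gateway http-listener create \
    --resource-group "$RG" --gateway-name "$AGW" --name PortalListner \
    --frontend-ip "$FRONTEND_IP" --frontend-port "$FRONTEND_PORT"
  # Backend pool targeting the portal FQDN from the Private DNS Zone
  run az network application-gateway address-pool create \
    --resource-group "$RG" --gateway-name "$AGW" \
    --name portalBackendPool --servers devportal.demo.com
  # Backend setting: HTTPS to the portal, trusting the new RootCA
  run az network application-gateway http-settings create \
    --resource-group "$RG" --gateway-name "$AGW" --name portalBackendSetting \
    --port 443 --protocol Https --host-name devportal.demo.com \
    --root-certs portalRootCert
  # Basic rule tying listener, pool and setting together
  run az network application-gateway rule create \
    --resource-group "$RG" --gateway-name "$AGW" --name portalRule \
    --rule-type Basic --priority 200 --http-listener PortalListner \
    --address-pool portalBackendPool --http-settings portalBackendSetting
}

create_portal_routing
```

Printing by default lets you review the exact commands before anything touches a live gateway.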

If everything is configured correctly, the Developer Portal should now be exposed over HTTP on port 80 through the Application Gateway's public IP address. Let’s test this out.

The Developer Portal is now exposed successfully.

The Backend health page should report the backend as Healthy, as shown below.

Expose External APIs using path-based routing in Application Gateway.

I will write a separate blog post on path-based routing, but here is a high-level overview. Assume we have two backend services:

· https://<host_name>/api/produc

· https://<host_name>/api/employee

We must create an API proxy for each service in API Management, with the URLs below:

API Management proxies:

· https://<host_name>/internal/api/product

· https://<host_name>/external/api/employee
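To see which of these two proxies the /external/* path rule configured below would let through, here is a tiny model of that prefix match in shell. It is a simplification, not Application Gateway's actual matcher: it assumes paths starting with /external/ go to the API Management backend and everything else falls through to the rule's default target. The target labels are hypothetical.

```shell
# Simplified model of the /external/* path rule; target labels are hypothetical.
route() {
  case "$1" in
    /external/*) echo "apim-backend" ;;    # path begins with /external/
    *)           echo "default-target" ;;  # anything else uses the rule's default
  esac
}

for p in /internal/api/product /external/api/employee; do
  printf '%s -> %s\n' "$p" "$(route "$p")"
done
```

Only the employee proxy matches, which is why the product proxy was published under /internal/ instead.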

We can now create path-based routing for the external API.

Open the Application Gateway:

- Delete the existing External Rule

- Add a new External Rule.

- Add a path-based setting.

- Enter /external/* as the Path. This ensures that every API whose URL path begins with /external/ can be accessed through the Application Gateway.

- Click Add.

- We can now share the details of this API with external customers and publish it through the Developer Portal.
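The External Rule steps above can likewise be sketched with the Azure CLI. This is an illustration under assumptions: the rule name ExternalRule, the listener, the API Management backend pool and setting, the default target for non-matching paths, the path-map names and the priority are all hypothetical placeholders for what you created in part one. The path map is created before the old rule is deleted so the new rule can reference it straight away. As before, commands are only printed unless `APPLY=1` is set, and each should be checked against `--help` for your CLI version.

```shell
# Sketch only: Azure CLI equivalent of the path-based External Rule steps.
# All names below are hypothetical placeholders; adjust to your own resources.
RG="${RG:-my-resource-group}"
AGW="${AGW:-my-app-gateway}"
EXTERNAL_LISTENER="${EXTERNAL_LISTENER:-ExternalListener}"   # existing listener
APIM_POOL="${APIM_POOL:-apimGatewayPool}"                     # APIM gateway pool
APIM_SETTING="${APIM_SETTING:-apimGatewaySetting}"            # APIM gateway setting
DEFAULT_POOL="${DEFAULT_POOL:-defaultPool}"                   # target for other paths
DEFAULT_SETTING="${DEFAULT_SETTING:-defaultSetting}"

# Print the command by default; execute it only when APPLY=1
run() {
  if [ "${APPLY:-0}" = "1" ]; then "$@"; else echo "$*"; fi
}

create_external_path_rule() {
  # URL path map: /external/* goes to the APIM gateway backend
  run az network application-gateway url-path-map create \
    --resource-group "$RG" --gateway-name "$AGW" --name externalPathMap \
    --rule-name externalApis --paths "/external/*" \
    --address-pool "$APIM_POOL" --http-settings "$APIM_SETTING" \
    --default-address-pool "$DEFAULT_POOL" --default-http-settings "$DEFAULT_SETTING"
  # Remove the existing basic External Rule
  run az network application-gateway rule delete \
    --resource-group "$RG" --gateway-name "$AGW" --name ExternalRule
  # Recreate the External Rule as a path-based rule on the same listener
  run az network application-gateway rule create \
    --resource-group "$RG" --gateway-name "$AGW" --name ExternalRule \
    --rule-type PathBasedRouting --url-path-map externalPathMap \
    --http-listener "$EXTERNAL_LISTENER" --priority 100
}

create_external_path_rule
```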